Since before the dawn of the computer age, scientists have been captivated by the idea of creating machines that could behave like humans. But only in the last decade has technology enabled some forms of artificial intelligence (AI) to become a reality.

Interest in putting AI to work has skyrocketed, with a burgeoning array of AI use cases. Many surveys have found that upwards of 90 percent of enterprises either already use AI in their operations or plan to in the near future.

Eager to capitalize on this trend, software vendors – both established AI companies and AI startups – have rushed to bring AI capabilities to market. Among vendors selling big data analytics and data science tools, two types of artificial intelligence have become particularly popular: machine learning and deep learning.

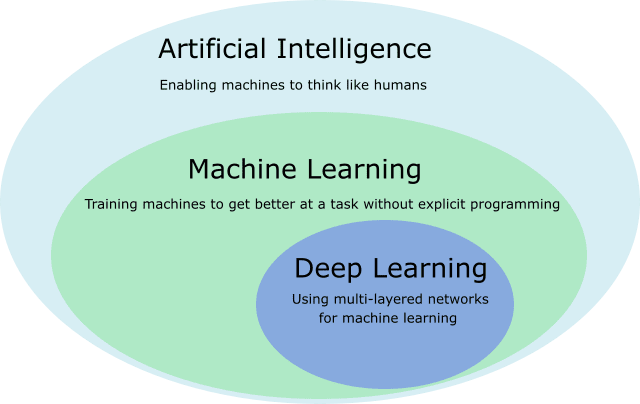

While many solutions carry the “AI,” “machine learning,” and/or “deep learning” labels, confusion about what these terms really mean persists in the market place. The diagram below provides a visual representation of the relationships among these different technologies:

As the graphic makes clear, machine learning is a subset of artificial intelligence. In other words, all machine learning is AI, but not all AI is machine learning.

Similarly, deep learning is a subset of machine learning. And again, all deep learning is machine learning, but not all machine learning is deep learning.

AI, machine learning and deep learning are interrelated: deep learning is nested within machine learning, which in turn is part of the larger discipline of AI.

Computers excel at mathematics and logical reasoning, but they struggle to master other tasks that humans can perform quite naturally.

For example, human babies learn to recognize and name objects when they are only a few months old, but until recently, machines found it very difficult to identify items in pictures. While any toddler can easily tell a cat from a dog from a goat, computers have historically found that task much more difficult. In fact, CAPTCHA services sometimes use exactly that type of question to make sure that a particular user is a human and not a bot.

In the 1950s, scientists began discussing ways to give machines the ability to “think” like humans. The phrase “artificial intelligence” entered the lexicon in 1956, when John McCarthy organized a conference on the topic. Those who attended called for more study of “the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.”

Some of these early researchers believed it would be only a few years before they solved these problems. In reality, however, it took several decades for computer hardware and software to advance to the point where AI applications like image recognition, natural language processing and machine learning became possible.

Critics rightly point out that there is a big difference between an AI system that can tell the difference between cats and dogs and a computer that is truly intelligent in the same way as a human being. Most researchers believe that we are years or even decades away from creating an artificial general intelligence (also called strong AI) that seems to be conscious in the same way that human beings are, if it will ever be possible to create such a system at all.

If artificial general intelligence does one day become a reality, it seems certain that machine learning will play a major role in the system’s capabilities.

Machine learning is the particular branch of AI concerned with teaching computers to “improve themselves,” as the attendees at that first artificial intelligence conference put it. Another 1950s computer scientist named Arthur Samuel defined machine learning as “the ability to learn without being explicitly programmed.”

In traditional computer programming, a developer tells a computer exactly what to do. Given a set of inputs, the system will return a set of outputs — just as its human programmers told it to.

Machine learning is different because no one tells the machine exactly what to do. Instead, developers feed the machine data and allow it to learn on its own.
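The contrast can be sketched in a few lines of Python. This is only an illustration under made-up assumptions: the spam-filter scenario, the function names, and the training data are all hypothetical and do not come from any real product. The first function encodes a rule a developer chose by hand; the second derives its rule from labeled examples.

```python
# Traditional programming: the developer hard-codes the rule.
def is_spam_rule(exclamation_count):
    return exclamation_count > 3  # threshold chosen by a person


# Machine learning (toy version): the threshold is derived from examples.
def learn_threshold(examples):
    """Pick the cutoff that misclassifies the fewest training examples."""
    candidates = sorted({count for count, _ in examples})
    best_cutoff, best_errors = None, len(examples) + 1
    for cutoff in candidates:
        # An example is an error when the prediction disagrees with its label.
        errors = sum((count > cutoff) != label for count, label in examples)
        if errors < best_errors:
            best_cutoff, best_errors = cutoff, errors
    return best_cutoff


# Made-up training data: (exclamation marks in a message, is it spam?)
training_data = [(0, False), (1, False), (2, False), (5, True), (7, True), (9, True)]
learned_cutoff = learn_threshold(training_data)


def is_spam_learned(exclamation_count):
    return exclamation_count > learned_cutoff
```

With different training data, `learn_threshold` would settle on a different cutoff without anyone editing the code, which is the essential difference from the hand-written rule.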

In general, machine learning takes three different forms: reinforcement learning, supervised learning, and unsupervised learning.

Reinforcement learning is one of the oldest types of machine learning, and it is very useful in teaching a computer how to play a game.

For example, Arthur Samuel created one of the first programs that used reinforcement learning. It played checkers against human opponents and learned from its successes and mistakes. Over time, the software became much better at playing checkers.

Reinforcement learning is also useful for applications like autonomous vehicles, where the system can receive feedback about whether it has performed well or poorly and use that data to improve over time.
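To make the feedback loop concrete, here is a minimal sketch of tabular Q-learning, one common reinforcement learning technique. It is not Samuel's checkers method; the five-position corridor, the reward of 1 at the goal, and all parameter values are assumptions chosen for illustration. The agent starts at position 0 and learns, from rewards alone, that stepping right leads to the goal.

```python
import random

N_STATES = 5        # positions 0..4; position 4 is the goal
ACTIONS = (-1, +1)  # step left or step right


def train(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]
    for _ in range(episodes):
        state = 0
        while state != N_STATES - 1:
            # Explore sometimes (and on ties); otherwise take the best-known action.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            next_state = min(max(state + ACTIONS[a], 0), N_STATES - 1)
            reward = 1.0 if next_state == N_STATES - 1 else 0.0
            # Feedback step: move the estimate toward reward + discounted future value.
            q[state][a] += alpha * (reward + gamma * max(q[next_state]) - q[state][a])
            state = next_state
    return q


q_table = train()
# Greedy policy for each non-goal position after training.
policy = ["left" if left > right else "right" for left, right in q_table[:-1]]
print(policy)
```

No one tells the agent that "right" is correct. The preference emerges because actions that eventually lead to a reward accumulate higher value estimates.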

Supervised learning is particularly useful in classification applications such as teaching a system to tell the difference between pictures of dogs and pictures of cats.

In this case, you would feed the application a whole lot of images that had been previously tagged as either dogs or cats. From that training data, the computer would draw its own conclusions about what distinguishes the two types of animals, and it would be able to apply what it learned to new pictures.

As the system absorbed more data over time, it would become better and better at the task. This technique is said to be “supervised” because it requires humans to tag the images in advance and oversee the learning process.
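A toy version of this workflow is sketched below. Real image classifiers learn from pixels; as a simplifying assumption, this example stands in two hand-picked measurements (weight and shoulder height) for each animal, and the data values are invented. It uses a nearest-centroid classifier, one of the simplest supervised techniques: training averages the examples for each label, and prediction picks the closest average.

```python
from math import dist

# Hypothetical labeled training data: (weight in kg, shoulder height in cm), label
labeled_examples = [
    ((4.0, 23.0), "cat"),
    ((5.2, 25.0), "cat"),
    ((3.6, 22.0), "cat"),
    ((20.0, 50.0), "dog"),
    ((30.0, 60.0), "dog"),
    ((12.0, 40.0), "dog"),
]


def fit_centroids(examples):
    """Training step: average the feature vectors of each label."""
    sums, counts = {}, {}
    for features, label in examples:
        total = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            total[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {
        label: tuple(v / counts[label] for v in total)
        for label, total in sums.items()
    }


def predict(centroids, features):
    """Assign the label whose centroid is closest to the new example."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


centroids = fit_centroids(labeled_examples)
print(predict(centroids, (4.5, 24.0)))   # cat
print(predict(centroids, (25.0, 55.0)))  # dog
```

The human-supplied labels in `labeled_examples` are what make this "supervised": without them, `fit_centroids` would have nothing to average per class.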

By contrast, unsupervised learning does not rely on human beings to label training data for the system. Instead, the computer uses clustering algorithms or other mathematical techniques to find similarities among groups of data.

Unsupervised machine learning is particularly useful for the type of big data analytics that interests many enterprise leaders. For example, you could use unsupervised learning to spot similarities among groups of customers and better target your marketing or tailor your pricing.

Some recommendation engines rely on unsupervised learning to tell people who like one movie or book what other movies or books they might enjoy. Unsupervised learning can also help identify characteristics that might indicate a person’s creditworthiness or likelihood of filing an insurance claim.
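The customer-grouping idea can be illustrated with k-means, a widely used clustering algorithm. The sketch below is a bare-bones version under stated assumptions: the customer figures are invented, the number of clusters is fixed at two, and the starting centroids are simply the first two points (production libraries use more careful initialization).

```python
from math import dist


def k_means(points, k, iterations=10):
    """Group points into k clusters without using any labels."""
    centroids = points[:k]  # naive initialization: the first k points
    clusters = []
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        centroids = [
            tuple(sum(axis) / len(cluster) for axis in zip(*cluster))
            if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return centroids, clusters


# Hypothetical customers: (orders per year, average order value in dollars)
customers = [(2, 20), (40, 25), (3, 22), (38, 30), (1, 18), (42, 28)]
centroids, clusters = k_means(customers, k=2)
print(clusters)
```

Here the algorithm separates occasional buyers from frequent ones purely from the numbers; no one labeled any customer in advance.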

Various AI applications, such as computer vision, natural language processing, facial recognition, text-to-speech, speech-to-text, knowledge engines, emotion recognition, and other types of systems, often make use of machine learning capabilities. Some combine two or more of the main types of machine learning; systems that incorporate techniques from both supervised and unsupervised learning are said to be “semi-supervised.” And some machine learning techniques, such as deep learning, can be supervised, unsupervised, or both.

The phrase “deep learning” first came into use in the 1980s, making it a much newer idea than either machine learning or artificial intelligence.

Deep learning describes a particular type of architecture that both supervised and unsupervised machine learning systems sometimes use. Specifically, it is a layered architecture where one layer takes an input and generates an output. It then passes that output on to the next layer in the architecture, which uses it to create another output. That output can then become the input for the next layer in the system, and so on. The architecture is said to be “deep” because it has many layers.
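The layer-to-layer hand-off described above can be sketched as a forward pass through a tiny network. Everything specific here is an assumption for illustration: the three layers, the hand-picked weights and biases, and the ReLU nonlinearity are not taken from any particular system, and a real network would learn its weights from data rather than have them written in.

```python
def relu(values):
    """A common nonlinearity: negative values become zero."""
    return [max(0.0, v) for v in values]


def dense_layer(inputs, weights, biases):
    """One layer: weighted sums of the inputs plus biases, then a nonlinearity."""
    sums = [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]
    return relu(sums)


# Hypothetical hand-picked parameters, listed as (weights, biases) per layer.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),   # layer 1: 2 inputs -> 2 outputs
    ([[1.0, 1.0], [-1.0, 2.0]], [0.0, -1.0]),  # layer 2: 2 inputs -> 2 outputs
    ([[1.0, 1.0]], [0.5]),                     # layer 3: 2 inputs -> 1 output
]


def forward(inputs, layers):
    activation = inputs
    for weights, biases in layers:
        # The output of one layer becomes the input of the next.
        activation = dense_layer(activation, weights, biases)
    return activation


output = forward([2.0, 1.0], layers)
print(output)  # [4.0]
```

Adding more entries to `layers` makes the network "deeper" without changing `forward` at all, which is why the architecture scales to many layers.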

To create these layered systems, many researchers have designed computing systems modeled after the human brain. In broad terms, they call these deep learning systems artificial neural networks (ANNs). ANNs come in several different varieties, including deep neural networks, convolutional neural networks, recurrent neural networks and others. These neural networks use nodes that are similar to the neurons in a human brain.

Neural networks and deep learning have become much more popular over the last decade in part because hardware advances, particularly improvements in graphics processing units (GPUs), have made them much more feasible. Traditionally, system designers used GPUs to perform the advanced calculations necessary for displaying high-quality video and 3D gaming.

However, those GPUs also excel at the type of calculations necessary for deep learning. As GPU performance has improved and costs have decreased, people have been able to create high-performance systems that can complete deep learning tasks in much less time and for much less cost than would have been the case in the past.

Today, anyone can easily access deep learning capabilities through cloud services like Amazon Web Services, Microsoft Azure, Google Cloud and IBM Cloud.
